All three currently available Llama 2 model sizes (7B, 13B, and 70B) are trained on 2 trillion tokens. Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 billion to 70 billion parameters. The smallest, Llama-2-7b, has 7 billion parameters, and its Hugging Face repository (meta-llama/Llama-2-7b) includes a PyTorch .pth checkpoint. In this section we look at the tools available in the Hugging Face ecosystem to efficiently train Llama 2 on simple …
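Model size largely determines the hardware you need. As a rough back-of-the-envelope sketch, raw weight memory is the parameter count times the bytes stored per parameter. The snippet assumes the nominal sizes (7B, 13B, 70B; the true counts differ slightly) and 16-bit weights (2 bytes per parameter, as in an fp16/bf16 checkpoint). It ignores optimizer state, gradients, activations, and framework overhead, so real training needs are considerably higher.

```python
# Nominal Llama 2 parameter counts (assumption: rounded, not exact counts).
MODEL_SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}


def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Raw weight storage in gigabytes (1 GB = 1e9 bytes).

    bytes_per_param=2.0 assumes 16-bit (fp16/bf16) weights;
    pass 1.0 for 8-bit or 0.5 for an idealized 4-bit format.
    """
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, n in MODEL_SIZES.items():
        print(f"Llama 2 {name}: ~{weight_memory_gb(n):.0f} GB of 16-bit weights")
```

Under these assumptions the 7B model needs about 14 GB just to hold its 16-bit weights, before any training state is added.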

Meta presents Llama 2 as open source and free for research and commercial use: "We're unlocking the power of these large language models. Our latest version of Llama, Llama 2, is now accessible to individuals…" and "Llama 2: the next generation of our open source large language model, available for free for research and commercial use."

Critics dispute the label. Why does it matter that Llama 2 isn't open source? Firstly, you can't just call something open source if it isn't, even if you are Meta or a highly respected researcher in the field like … The Open Source Initiative (OSI) likewise states that Meta's LLaMa 2 license is not Open Source, while noting that it is pleased to see Meta lowering barriers for access to powerful AI systems.

So what is the exact license these models are published under? It is a bespoke commercial license that balances open access to the models with responsibility and …

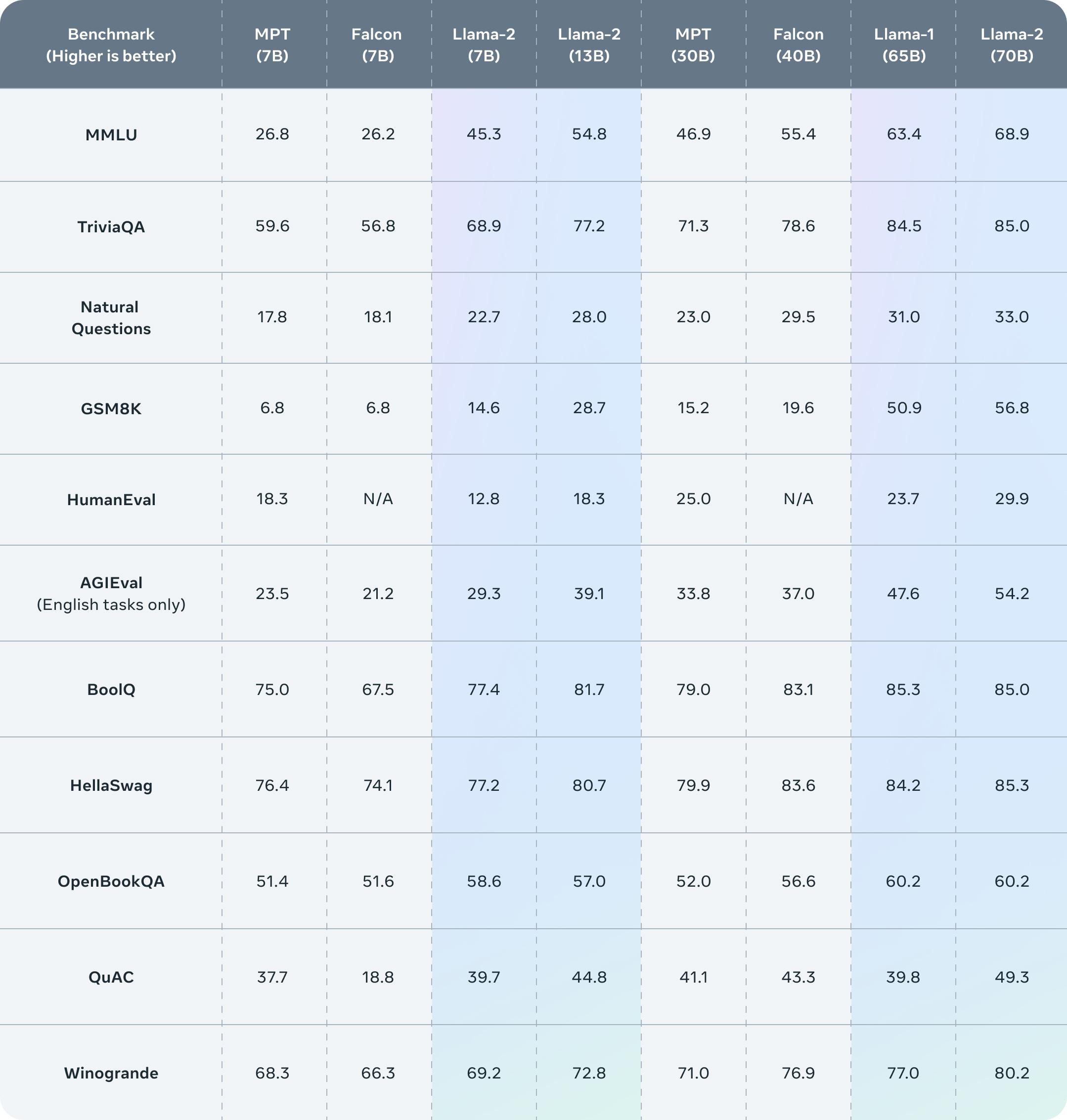

Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters, released in three sizes: 7B, 13B, and 70B.

Llama 2 models have double the context length of Llama 1. Example GPUs listed in hardware discussions include the RTX 3060, GTX 1660, RTX 2060, AMD 5700 XT, RTX 3050, AMD 6900 XT, RTX 2060 12GB, RTX 3060 12GB, RTX 3080, and A2000. A common question is how much RAM Llama 2 70B needs with a 32k context; one user asks whether 48, 56, 64, or 92 GB is needed for a CPU … Some variants offer even higher accuracy, at the cost of higher resource usage and slower inference. Explore all versions of the model and their file formats (GGML, GPTQ, and HF), and understand the hardware requirements for local …
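The RAM question for Llama 2 70B at a 32k context can be approached with a rough estimate: weight storage at a given precision plus the key/value (KV) cache, which grows linearly with context length. This is a sketch under stated assumptions, not a sizing tool. It uses the published Llama 2 70B architecture (80 layers, 8 key/value heads via grouped-query attention, head dimension 128), a 16-bit KV cache, and idealized bits-per-weight values; real quantized formats such as GGML add per-block scale overhead, and runtimes add their own buffers. Note also that Llama 2's native context window is 4,096 tokens, so a 32k context implies an extended-context variant.

```python
# Llama 2 70B architecture values (from its published model config).
NUM_LAYERS = 80
NUM_KV_HEADS = 8      # grouped-query attention: 8 KV heads shared by 64 query heads
HEAD_DIM = 128        # hidden size 8192 / 64 attention heads
NUM_PARAMS = 70e9     # assumption: nominal parameter count

GIB = 1024 ** 3


def kv_cache_bytes(context_len: int, bytes_per_value: int = 2) -> int:
    """Bytes for keys and values across all layers (16-bit cache by default)."""
    # Factor 2 = one key vector and one value vector per KV head per layer.
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * bytes_per_value
    return per_token * context_len


def weights_bytes(bits_per_weight: float) -> float:
    """Idealized weight storage, ignoring quantization metadata and overhead."""
    return NUM_PARAMS * bits_per_weight / 8


def total_gib(context_len: int, bits_per_weight: float) -> float:
    """Weights plus KV cache, in GiB."""
    return (weights_bytes(bits_per_weight) + kv_cache_bytes(context_len)) / GIB


if __name__ == "__main__":
    ctx = 32 * 1024
    print(f"KV cache at {ctx} tokens: {kv_cache_bytes(ctx) / GIB:.1f} GiB")
    for bits in (16, 8, 4):
        print(f"{bits}-bit weights + KV cache: ~{total_gib(ctx, bits):.0f} GiB")
```

Under these assumptions the 32k-token KV cache alone is 10 GiB, and 4-bit weights plus that cache come to roughly 43 GiB before any runtime overhead.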
